Chef

A conversational assistant for Blue Apron designed to provide a streamlined, personalized, and hands-free cooking experience.

Recognized by Core77's Design Awards as a Student Notable Honoree and for the Community Choice Prize in Consumer Tech.

Team

with Anna Boyle, Deepika Dixit, and Yiwei Huang

Time

7 weeks, CMU Interaction Design Studio Fall 2019

Role

Design Research, Conceptual Design, Interaction Design, Visual Design

Tools

After Effects, Illustrator, Adobe XD

Project Objectives

Our challenge was to design a virtual assistant for a company that had not yet launched one.

The use of AI continues to grow because it offers efficient solutions to problems facing people and businesses, as well as quick ways to access relevant information. In fact, the research consultancy Gartner predicted that by 2020, customers would manage 85% of their relationship with an enterprise without interacting with a human.

The Outcome

Meet Chef, a conversational assistant for cooking.

Designed for iOS and Google Nest Hub Max

For a variety of situations

base

listening

speaking

loading/processing

timer on

timer complete

done/celebration

updating

no results

alert

Chef makes personalized meal recommendations based on user preferences and third-party data.

Connects to smart kitchen appliances for a streamlined cooking experience.

Guides multiple users through cooking, delegating tasks based on skill level.

Uses food recognition to log meals and make recipe substitutions.

Customizes future meal recommendations based on personal taste.

Our Process

Exploratory Research

A Virtual Assistant for Cooking

When thinking about situations and experiences that might benefit from a conversational assistant, we landed on cooking. Cooking is task-based, usually requires both hands, and often involves a piece of technology. There's an opportunity for a virtual assistant to anticipate and aid with tasks, letting users focus on making the recipe without having to touch a device.

Blue Apron: Cooking for all

We chose Blue Apron because of its service, mission, and strong brand identity. Blue Apron is a recipe and fresh-ingredient delivery service that sends subscribers pre-portioned meal kits. Launched in 2012, the company's mission is to make home cooking fun and accessible to all.

Understanding Brand Identity

To ensure a cohesive virtual assistant, we started by exploring and understanding Blue Apron’s corporate and brand identity. The Blue Apron brand is friendly, yet refined. Its illustrative visual style is clean, fun, personal, and dynamic.

To inform the design of our virtual assistant, we drew three key insights about the company's identity.

Accessible

The brand aims to make home cooking easy for everyone from beginners to culinary wizards.

Wholesome

The company hopes to reimagine the food system by sourcing locally grown ingredients, promoting healthy lifestyles, and creating a community-driven and environmentally sustainable distribution network.

Whimsical

The brand creates a friendly and fun experience through its illustrative visual language and informal tone of voice.

The Current Experience

To inform our design process, we looked into the current customer experience. Given our fast timeline, we talked to people we knew who used the service and watched YouTube videos of customers cooking and rating recipe kits.

Our Target User

Through our research into the brand, we found that the largest proportion of the company's user base is US-based women between the ages of 25–34. 82% of account holders are female, often sharing meals with partners.

Concept Development

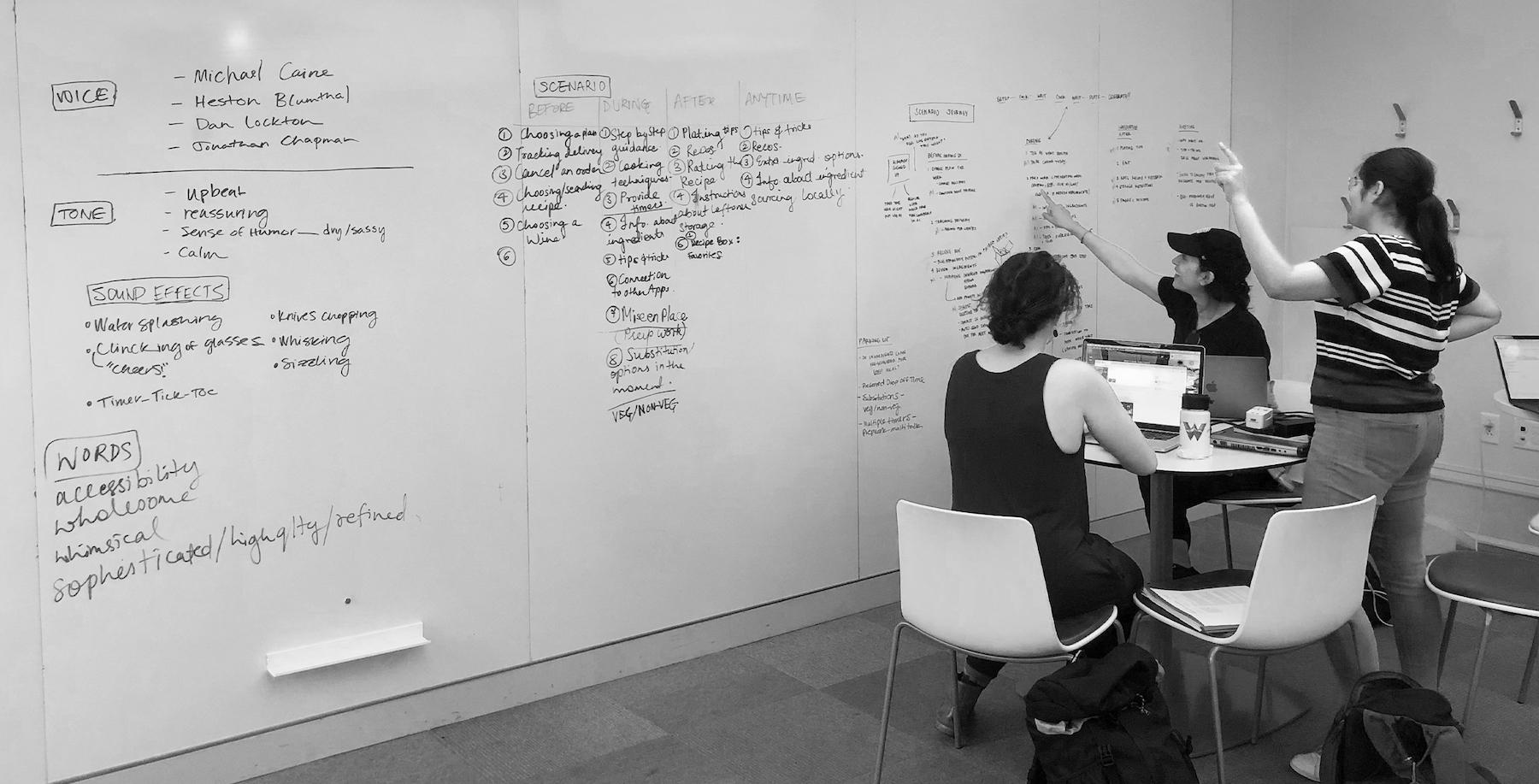

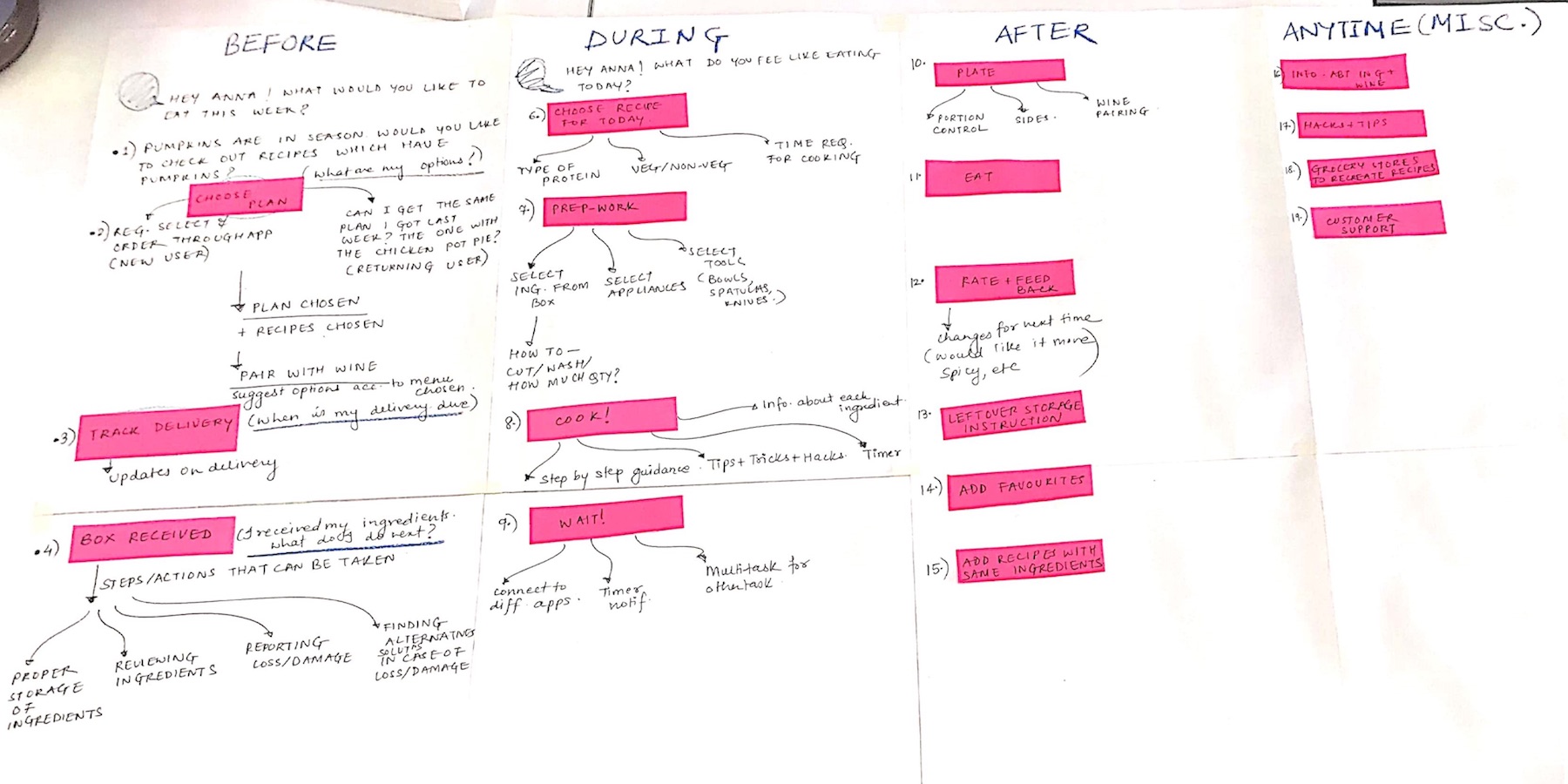

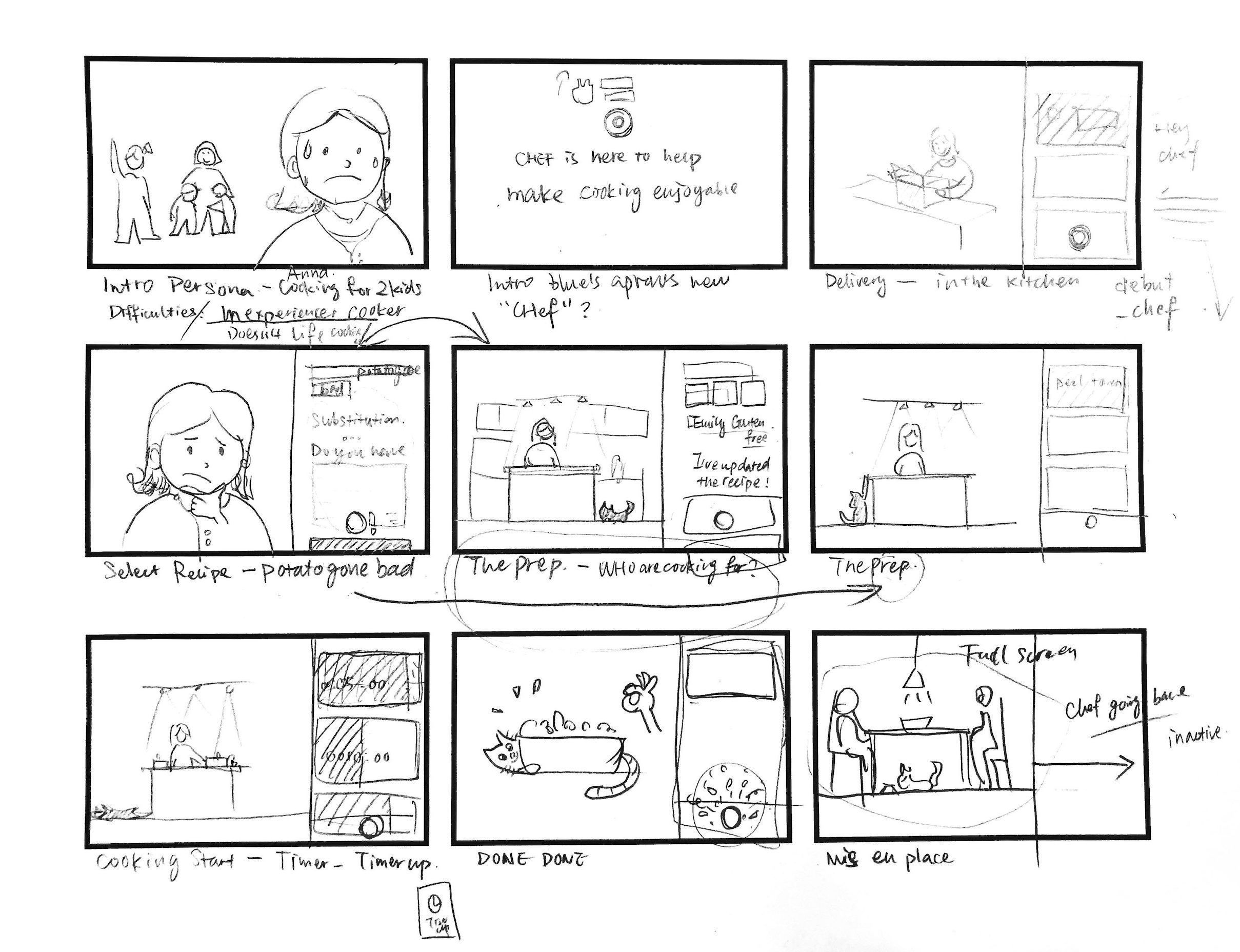

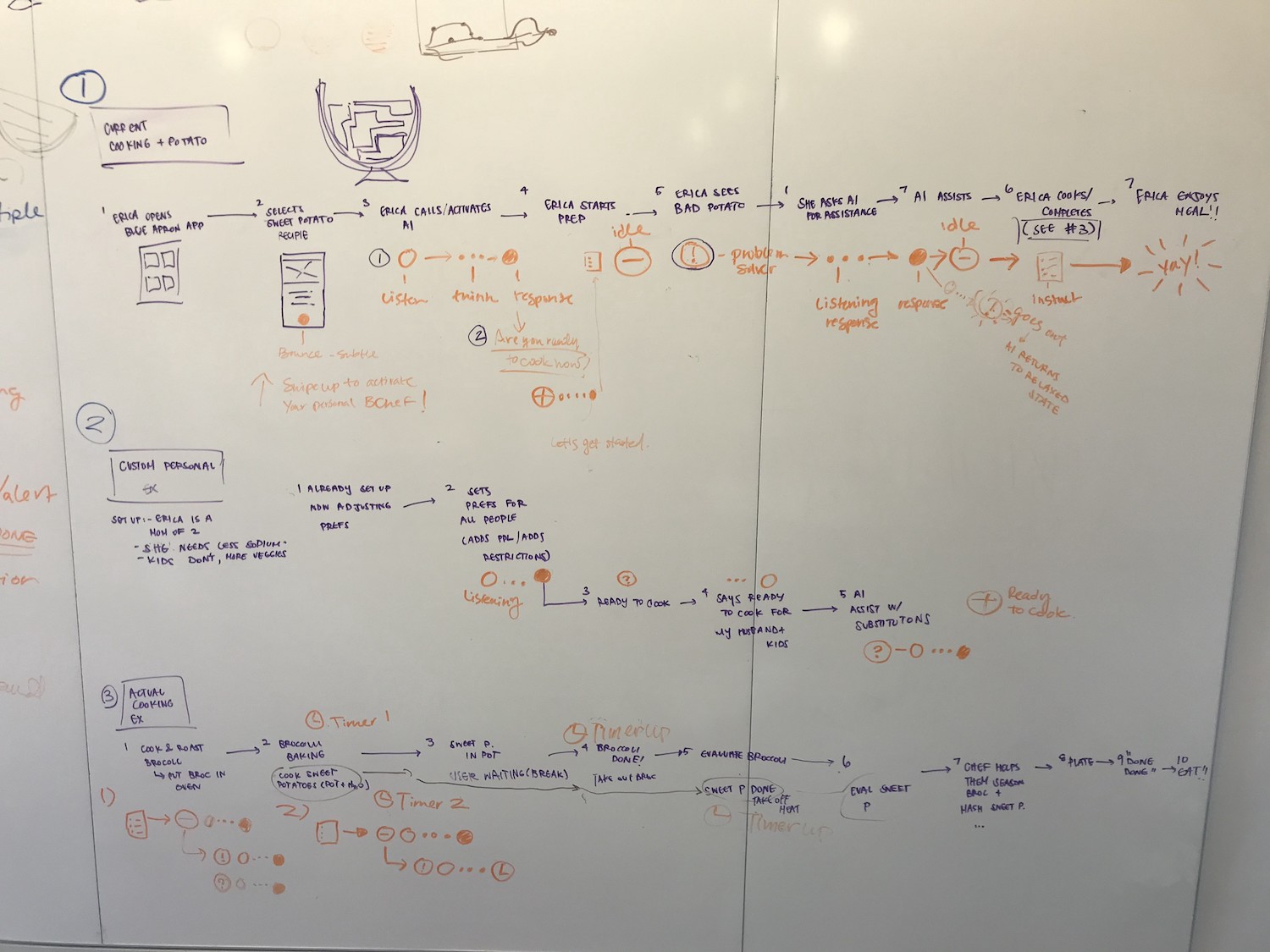

Building Scenarios

To help us think about situations in which an assistant would be useful, we mapped out the journey of a person cooking a Blue Apron meal. This journey included what a person might be doing and how they might be feeling before, during, and after cooking. Understanding emotional state helped us think about how our conversational assistant might respond to or match a user's mood.

After a few iterations, we focused on three specific scenarios that may benefit most from a conversational assistant.

01

Recipe substitution and modification

A virtual assistant can provide real-time help with ingredient substitutions while cooking. An assistant can also keep a user informed of the preferences or dietary restrictions of those they are cooking for.

02

Step-by-step cooking guidance

Our assistant can guide a user step-by-step through meal prep and cooking a recipe.

03

Multi-tasking with multiple timers

While cooking, our assistant can help users anticipate tasks and manage multiple timers.

scenario storyboard

Defining our Virtual Assistant

Design Principles

With research and scenario insights, we defined design principles for the personality, tone, and form of our virtual assistant. We wanted our virtual assistant to be:

Friendly

An interactive, upbeat, and optimistic companion.

Engaged

Proactive and anticipatory.

Encouraging

Stable, reassuring, and guiding.

States

We explored different responses, reactions, or states of our virtual assistant through each stage of our user journey.

v2 VA states mapped to three user scenarios

We found nine key states for our virtual assistant. These states evolved as we moved into defining form, motion, and UI.

01 Base/Idle: Active, but at rest

02 Listening: Listening to a user speak

03 Alert/Issue: Reacting to a problem or task that is incomplete

04 Thinking: Processing a task

05 Timer On: Confirmation that a timer is set

06 Timer Complete: Alert that a timer is complete

07 Speaking: Speaking to a user

08 No Results: Search yields no results

09 Celebration: Reaction when a user finishes cooking a meal

Form & Motion

When defining Chef’s visual form, we looked to existing virtual assistants for inspiration.

siri

google assistant

cortana

A common theme among many virtual assistants is abstract form. To avoid the uncanny valley that comes with humanizing an AI, we decided to explore abstract representations. We used Blue Apron’s illustrative style as a starting point, exploring both the form and motion of common cooking tools.

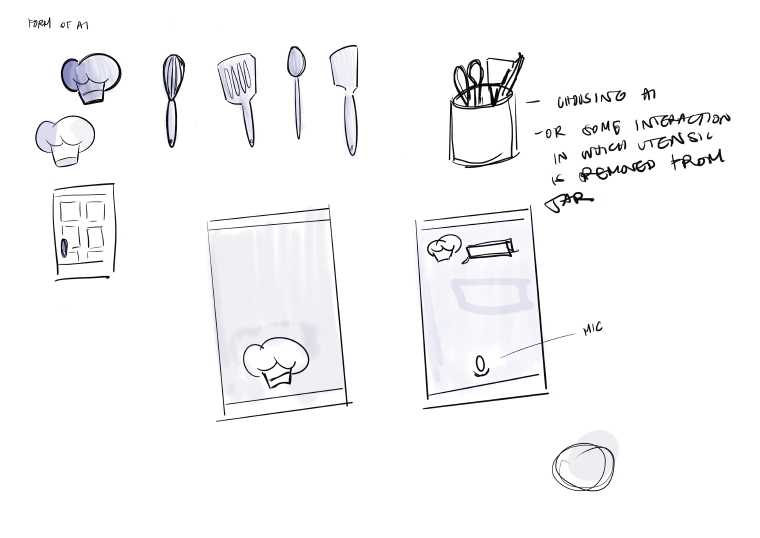

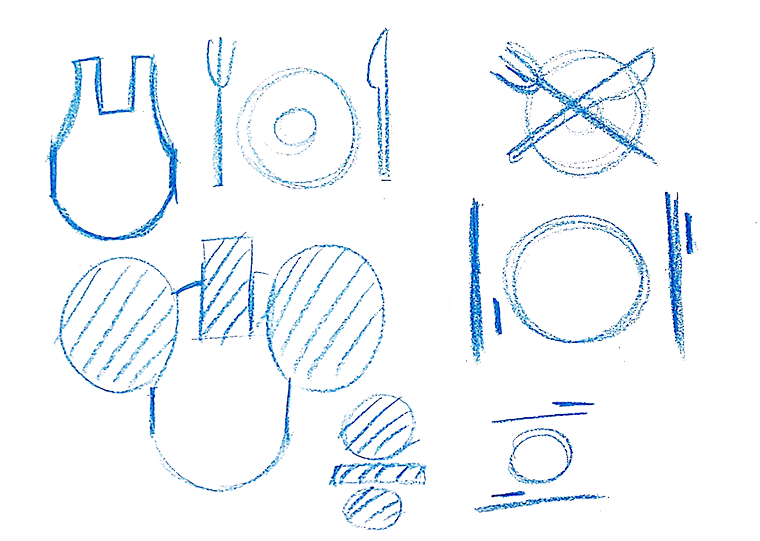

form explorations

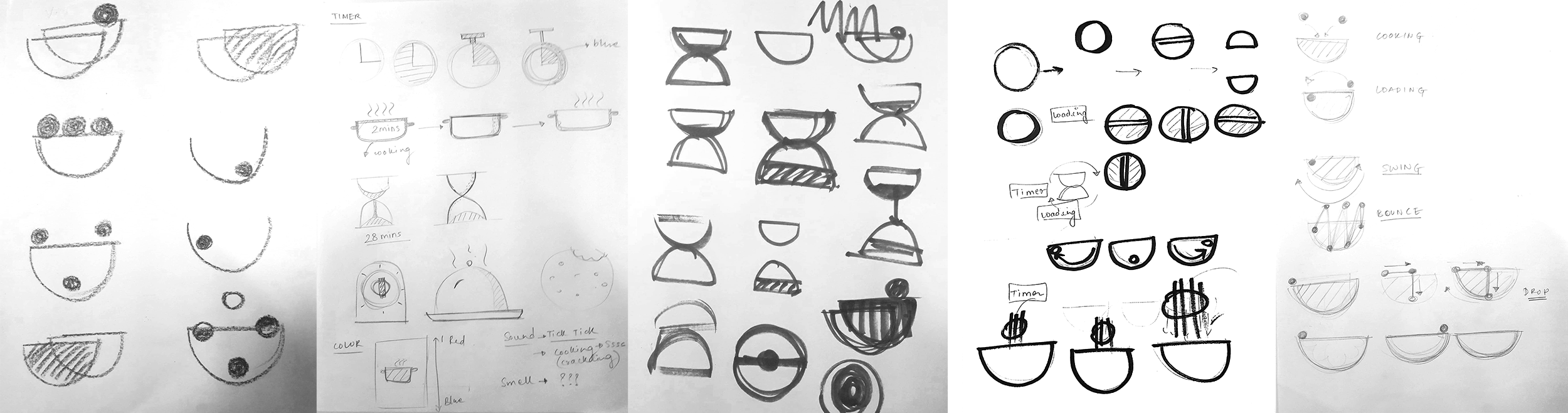

motion explorations

As we explored form and motion together, we quickly realized that we needed to simplify. Too many elements did not always work together cohesively and were hard to see on a small smartphone screen.

We decided to simplify the form to the shape of a bowl. We landed on three elements: a fill shape and an outline shape representing a bowl, and a circular shape representing an ingredient. We chose a combination of colors based on Blue Apron’s primary and secondary color schemes.

This form proved to be simple and flexible enough to modify across all states of motion. As we started building out the motion of our states, we animated simple components in After Effects and iterated upon them. Our process was all about starting simple, building up, getting feedback, and refining.

A few motion explorations

Thinking (processing)

We explored a common paradigm of a loading circle. We used simple circles moving in a circular motion as a starting point.

Listening/Speaking

We built on the commonly seen "recording" state and the idea of sending and receiving.

Celebration

We started by building on more fluid, quick, and dynamic movement.

Voice & Conversation

Voice is an integral part of our experience. We explored various voices on a scale from human to robotic. We ended up choosing human female and male voices, as they provide a clearer, more reassuring, and less distracting way to communicate information. We also charted out a framework for conversations. This included how users can call up Chef ("Hey Chef") and how Chef might scaffold tasks during cooking guidance.

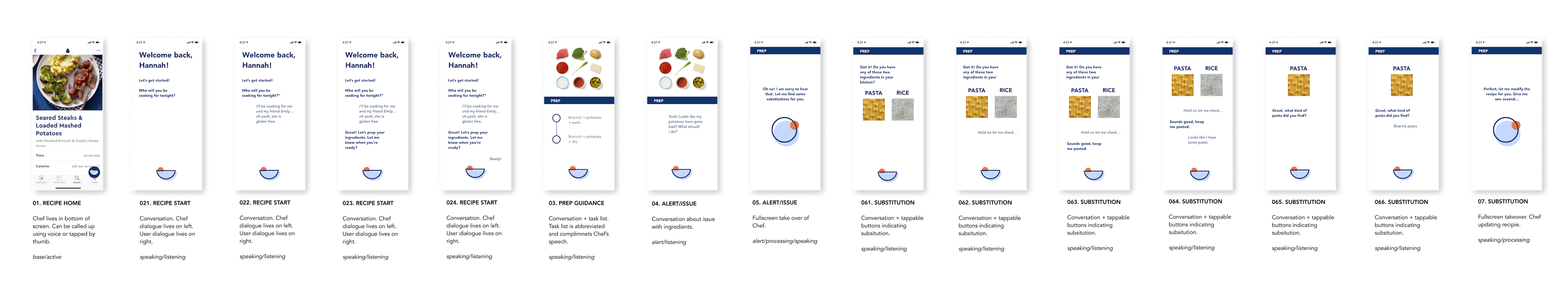

Integrating Mobile

When integrating mobile, we considered the placement of Chef and the structure of conversation and cooking guidance. We decided to place Chef in the bottom section of the screen, leaving more space for abbreviated text to accompany verbal cooking instructions. Text augments conversation but does not mimic it. Short, large text ensures that a user moving around a kitchen can listen to Chef announce the next step and glance back at the screen from a distance if needed.

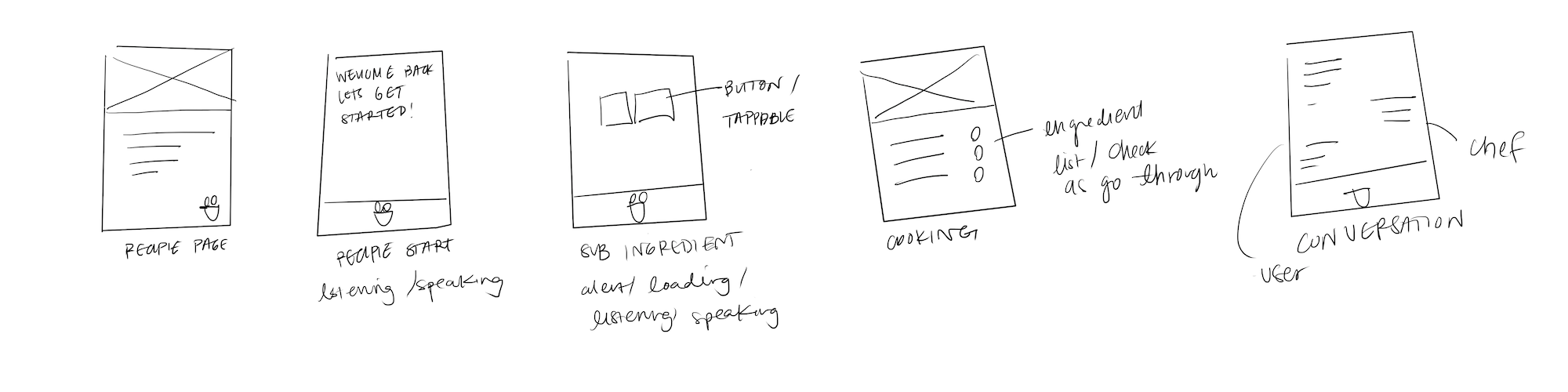

We began by developing templates, then iterated and refined them based on feedback and additional research. Over the course of our project, we completed three key iterations: the first analog and the rest digital.

v1 mobile interaction templates: chef placement, conversation, ingredient task list

v2 high-fidelity mobile flow: summary of interactions and Chef's states by scenario

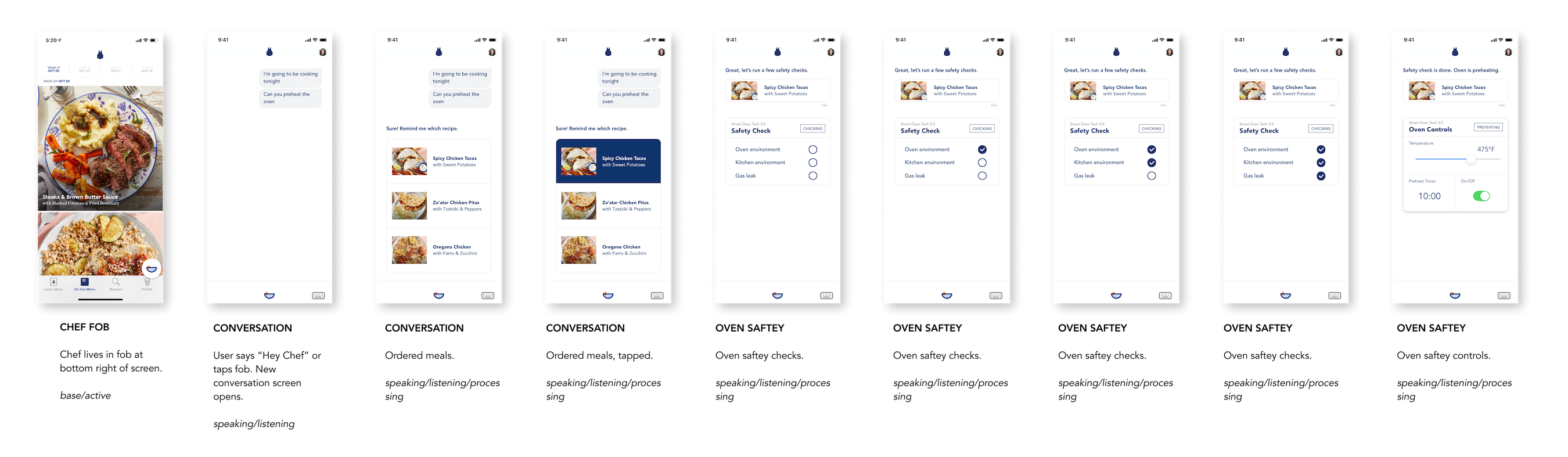

v3 high-fidelity mobile flow: summary annotations of 2 new scenarios

Concept Refinement

Test Kitchen

After developing our assistant for mobile, we ran an analog test to evaluate Chef's usefulness. Anna was the solo cook, and I played Chef.

We took away three insights that informed the next iteration of our design:

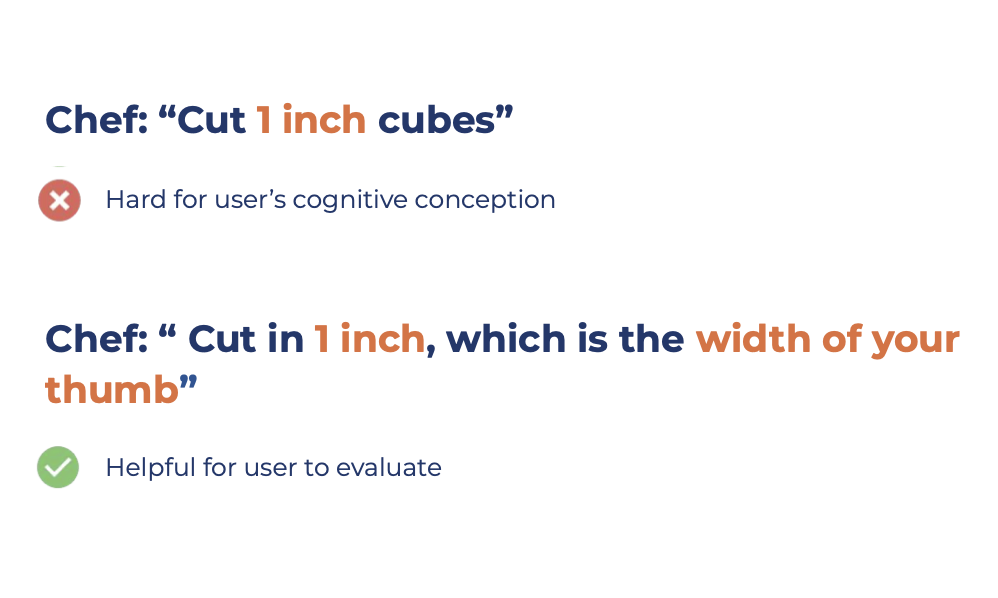

Designing for Conversations

During the cooking experience, our recipe used a number of technical terms for measuring ingredients. Precise quantities work well on a screen but are hard to grasp through conversation alone.

We changed our approach to conversational design with phrasing that is more natural and descriptive.

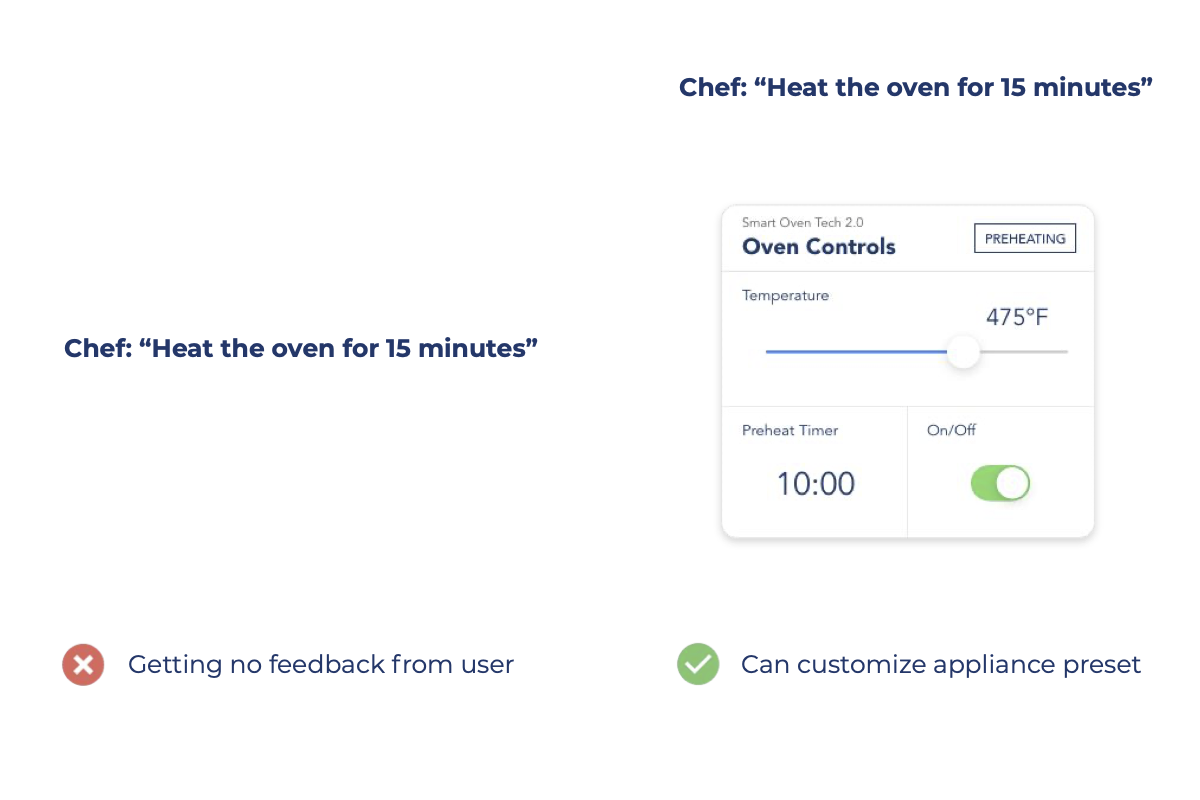

Personalizing Settings

Our oven preheated faster than expected, which ended up cutting cook time in half.

There is an opportunity for Chef to connect to smart appliances, know the presets of each device, and inform users.

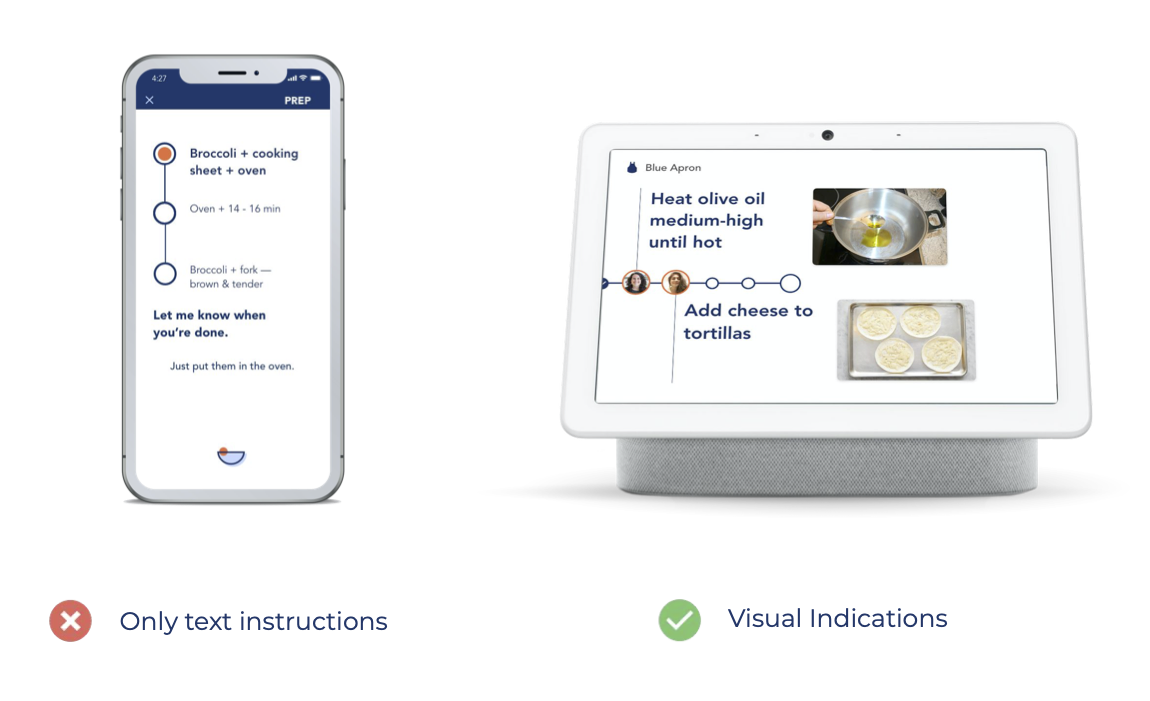

Setting Outcome Expectations

There was also confusion about what our cooked meal should look and taste like.

Our design should provide better context and expectations. It should convey more information visually and audibly by describing what a meal should look and taste like.

Refining Interactions

We also took a closer look at the interaction paradigms of two existing virtual assistants: Google Assistant and Ubisoft’s Sam (a virtual assistant for gaming).

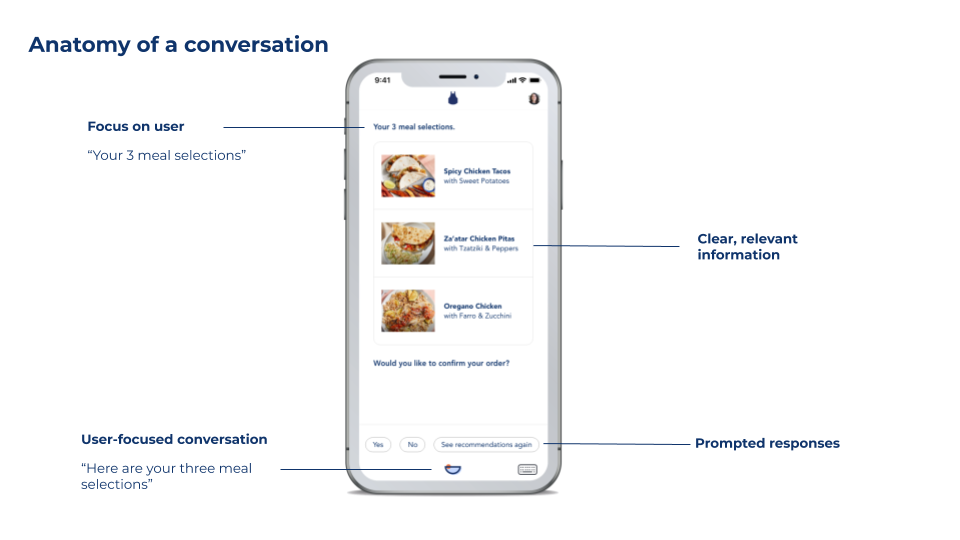

Google Assistant

Harmony between Conversational Design and UI Design

Google Assistant showcases the anatomy and interactions between virtual assistants and users. Based on Google's best practices, we shifted our design to:

- More user-focused conversation (“your” vs. “I”)

- Short, simple, concise words that are direct and easy to understand

- Questions that are specific to elicit targeted responses

- Anticipating user responses by quickly surfacing and connecting to relevant data

Ubisoft's Sam

Supporting a Multi-user Experience

Sam makes collective gaming possible by gathering data from multiple users, being context-aware, and providing tips from external sources. This prompted us to think about how Chef can lead multiple users through a cooking experience and be context-aware, while still respecting user privacy and control.

Expanding our Ecosystem

Developing a Multi-user Journey

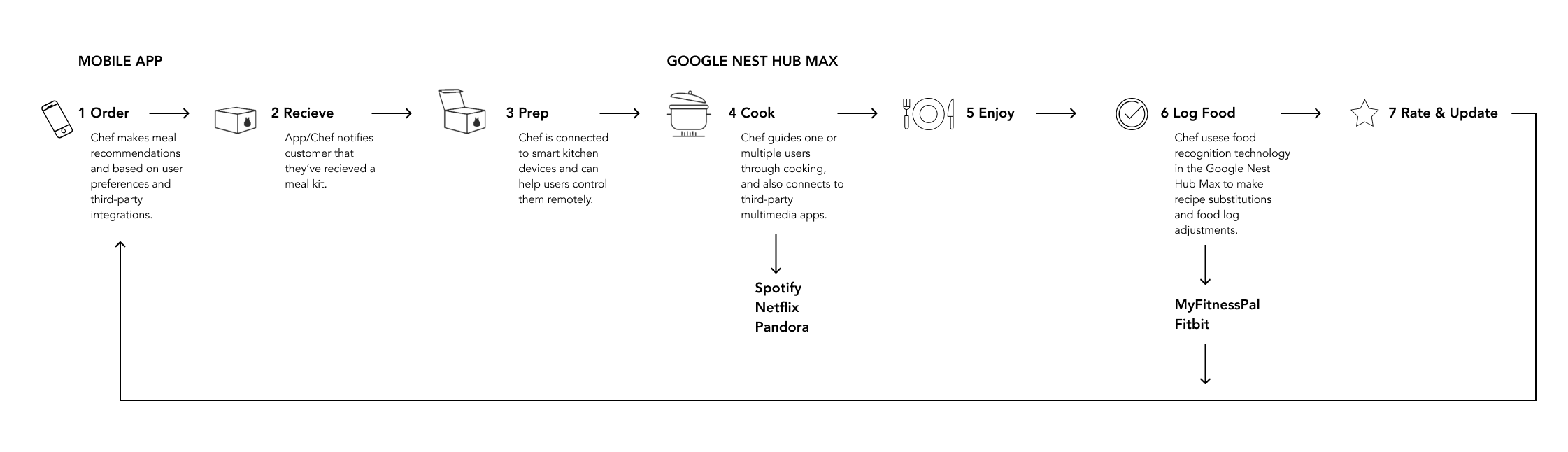

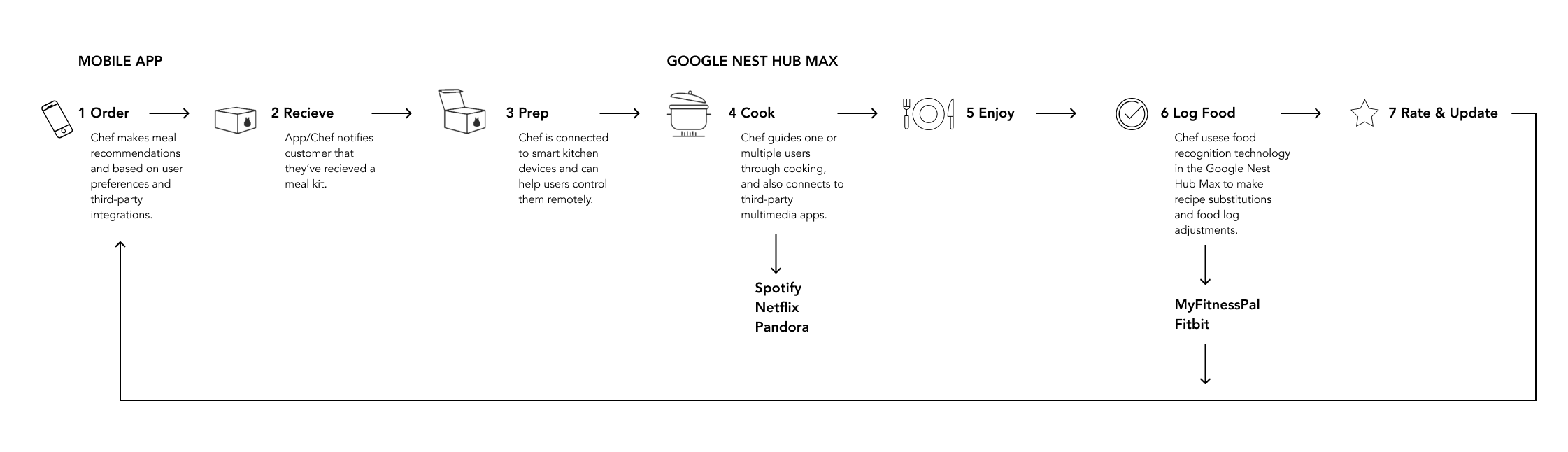

We expanded our ecosystem to support new contexts and multi-user cooking. Chef will support users and their cooking partners through a complete journey from ordering to rating recipes.

expanded ecosystem

Chef will provide meal recommendations based on personal taste and health data, work with smart kitchen appliances to streamline prep time, delegate cooking tasks based on skill level, substitute recipe ingredients, and use feedback to refine future recommendations.

Google Nest Hub Max

We chose to incorporate Google Nest Hub Max into our expanded ecosystem. As a platform, Google Nest Hub Max has a prominent screen, is useful in communal spaces, and connects with multiple devices and third-party applications. Other key features include gesture control, facial and voice recognition, and the subtle presence of Google Assistant.

Developing our UI

We based the UI for Google Nest Hub Max on the UI we developed for mobile. We incorporated a dynamic task timeline showcasing user photos and food outcome photos. Text is prominent and kept to short phrases that reference the task at hand. Users can also ask Chef to surface reference videos or third-party media apps, using gesture controls to pause and play.

We purchased a Google Nest Hub Max to ensure we were adopting the platform's existing macro- and micro-interaction patterns. Our scenarios use vocal recognition, speech recognition, facial recognition, and gesture controls to surface and change information. Chef's presence is subtle in order to bring more focus to the cooking tasks at hand.

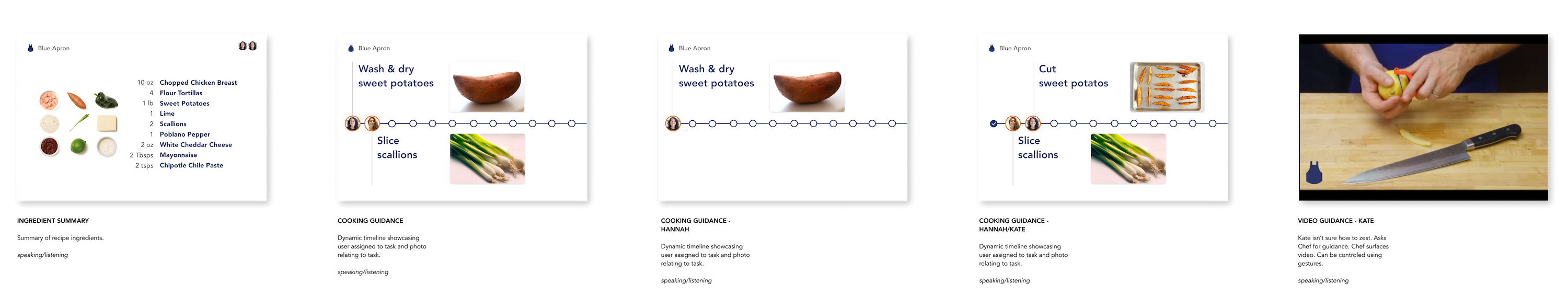

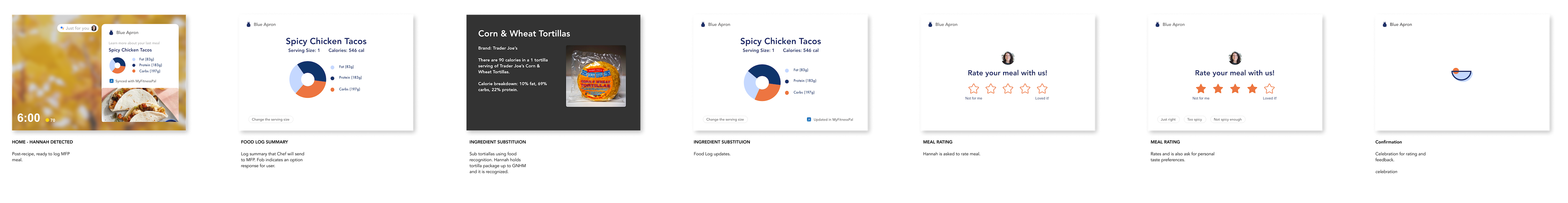

Google Nest Hub Max user flow by scenario

Cooking guidance

Guidance based on facial and vocal recognition. Users initiate the next task, or Chef initiates it if enough time has passed. Kate sees a how-to video and can use gesture control to pause and play.

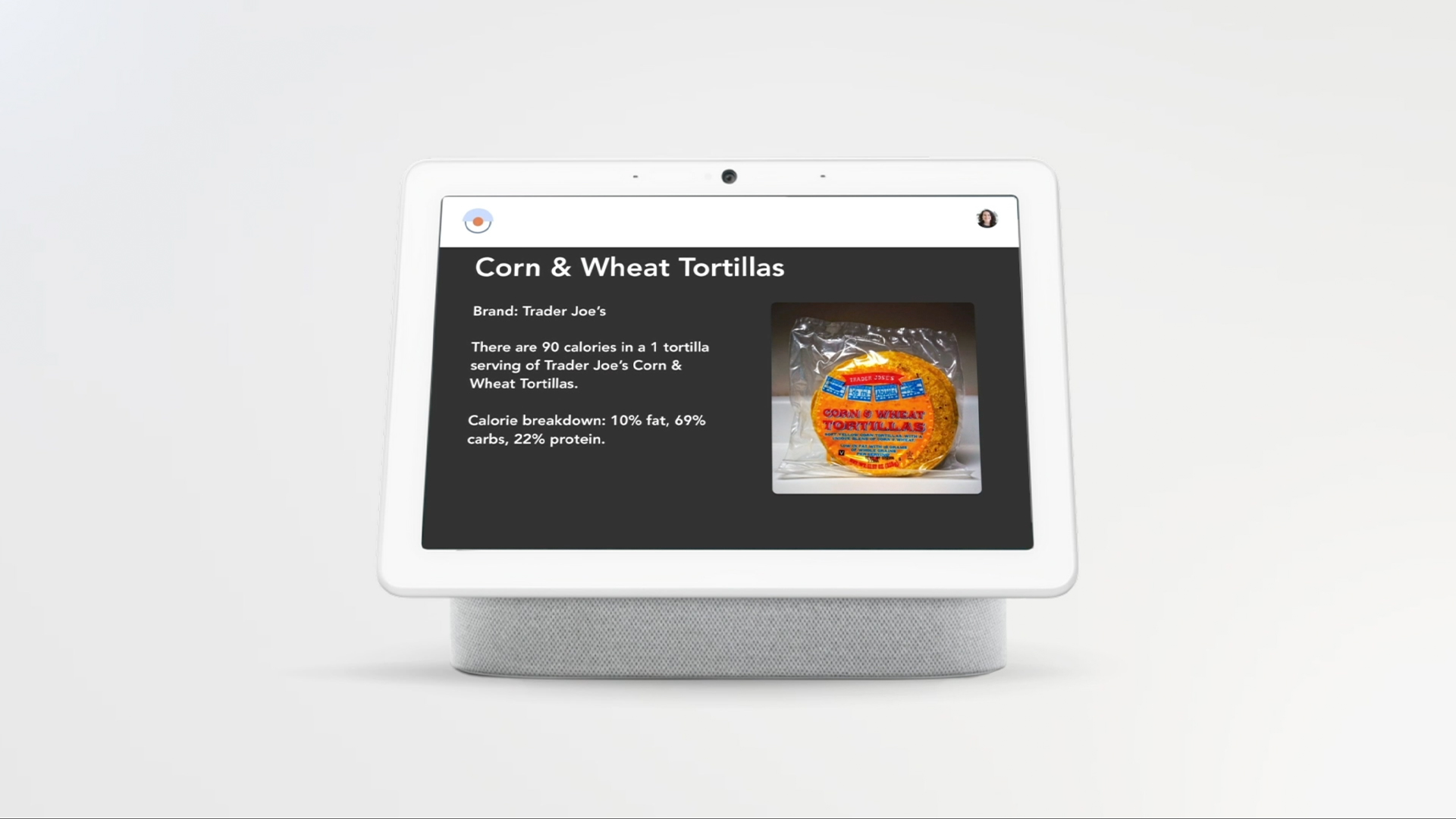

Ingredient Substitution

Chef asks Anna if she'd like to send a food log to MyFitnessPal (a third-party integration). Using food recognition technology, Anna adds her recipe modification to the food log.

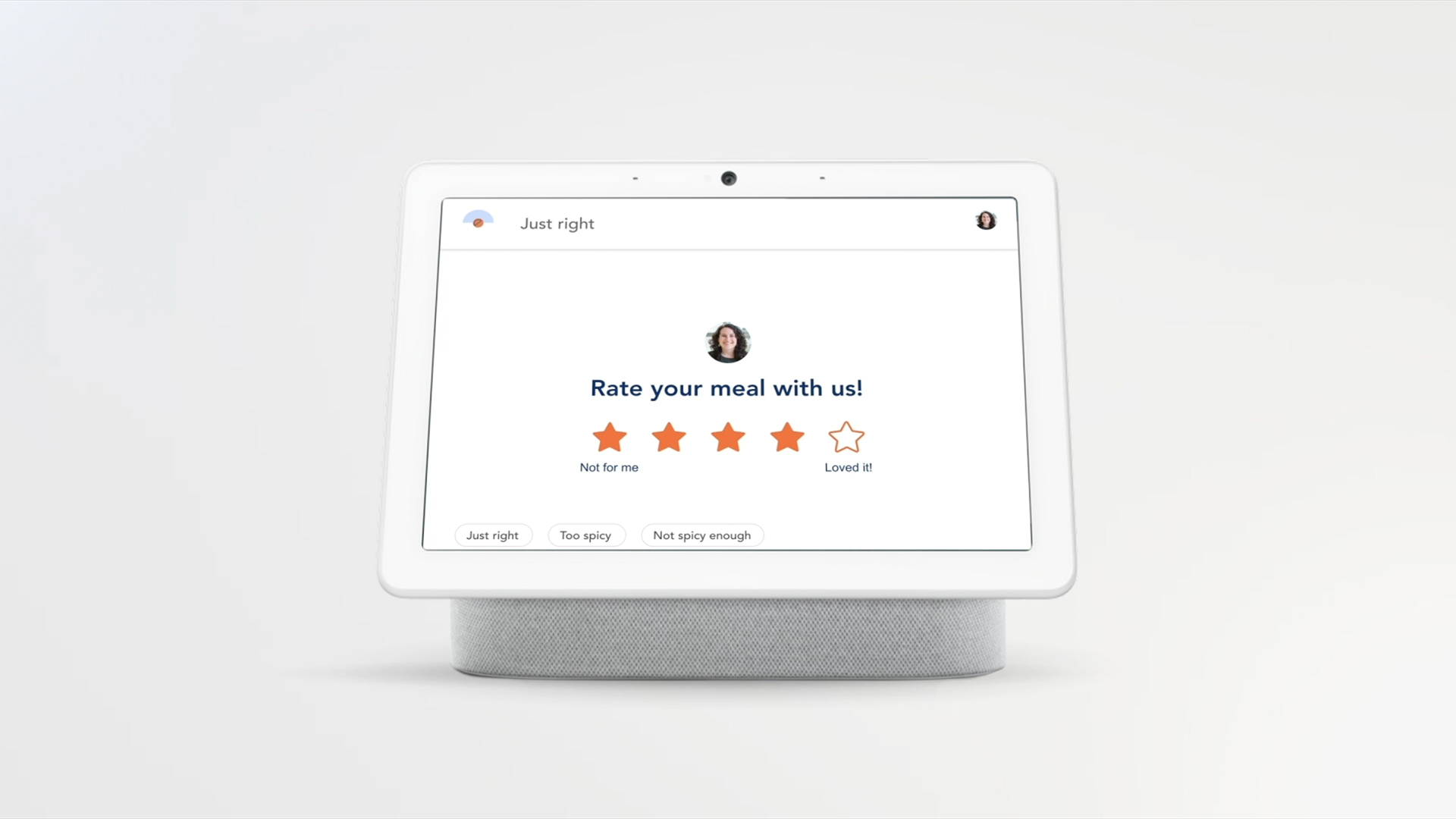

Meal and Personal Taste Updates

Anna can rate her meal and update her personal taste preferences (e.g., spiciness level). These ratings feed back into the meal recommendations that Chef makes each week.

Reflection

Designing for conversations

We learned a lot about user-centered conversations in terms of both interaction and UI. We were inspired by Google’s AI Design Guidelines and used them to refine our design. This included using short, simple, concise words and prompting users with task-oriented responses. We also adopted the principle that less is more: an intuitive and clean UI is easier to understand.

Prototyping & Testing

Prototyping and testing are fundamental. We gathered a number of insights from our test kitchen experience that helped inform our expanded ecosystem. Going forward, we would love to continue testing.

Understanding Human Values

When defining the autonomy of Chef, we started thinking about human values and how they factor into what we’re creating. We had some great discussions around user consent, control, autonomy, and communication.

© 2022 — Amrita Khohsoo