Time [in]Material: A Portal for Spatial Memories

An early work-in-progress, exploratory project about presence and memory through an interactive, multisensory installation.

Team

with Christianne Francovich, Isabel Ngan, and Rachel Arredondo

Time

10 weeks, CMU hyperSense course taught by Dina El-Zanfaly, Fall 2020

Role

Design Research, Conceptual Design, Interaction Design, Prototyping, Technical Implementation

Tools

Raspberry Pi, XBOX Kinect, RGB LED Matrices, open-source software, Figma

Project Objectives

We pass through common and shared spaces in our everyday life, yet these spatial experiences are lost to time. With a focus on a research-through-design methodology and physical computing, we explore ways of materializing these fleeting spatial interactions to create a tangible sense of connection to time and memory.

The Outcome

Using a blend of physical computing and computer vision, we designed and prototyped a public installation that explores presence, temporality, and memory through embodied, sensory-based interactions.

Our prototype and process were largely exploratory. Throughout this project, we expanded our understanding of design, tangible interactions, and technical implementation.

By prototyping multiple modalities, we investigated the interconnectedness of objects and the environment and its influence on one’s perception of time and space.

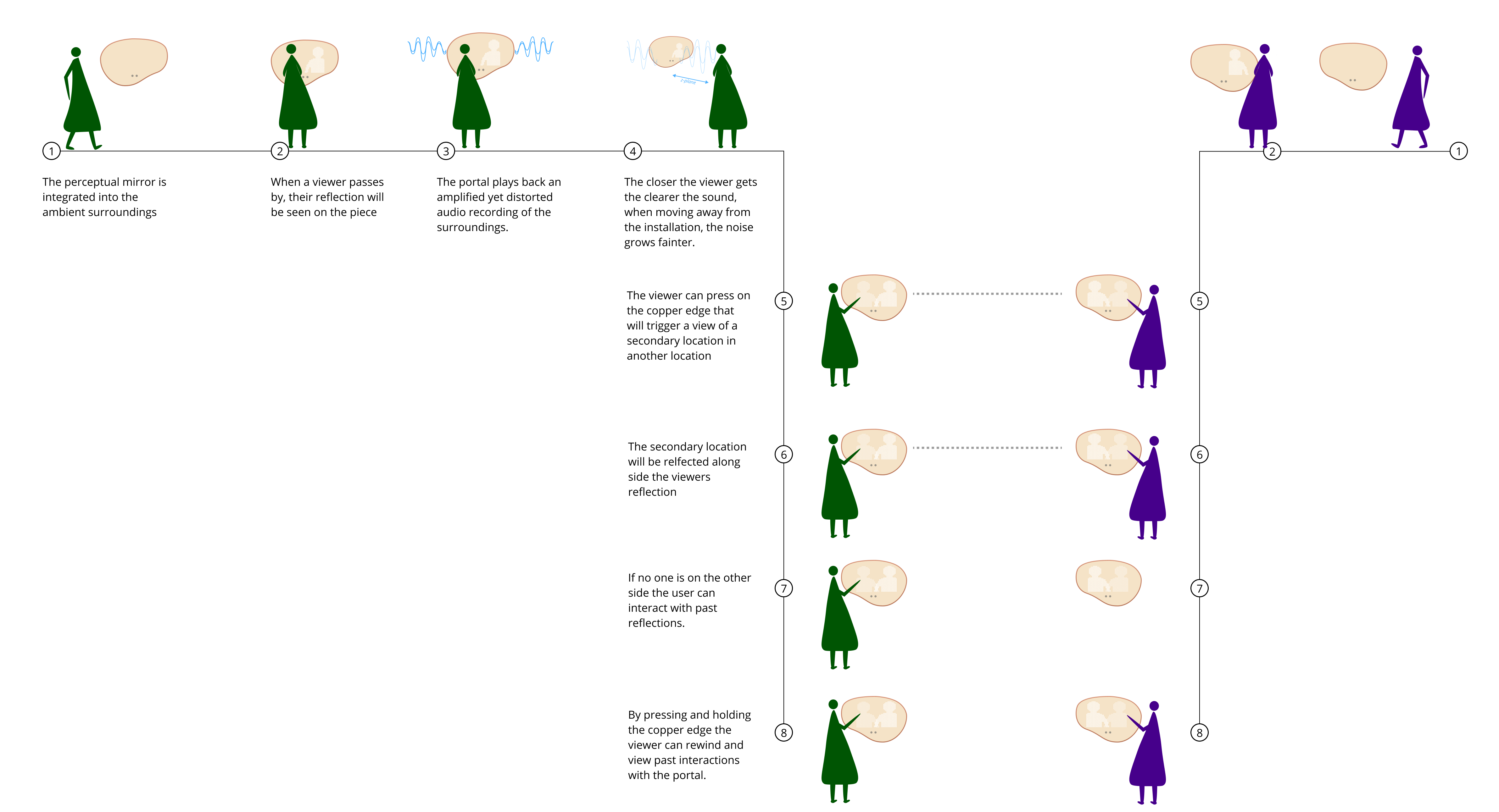

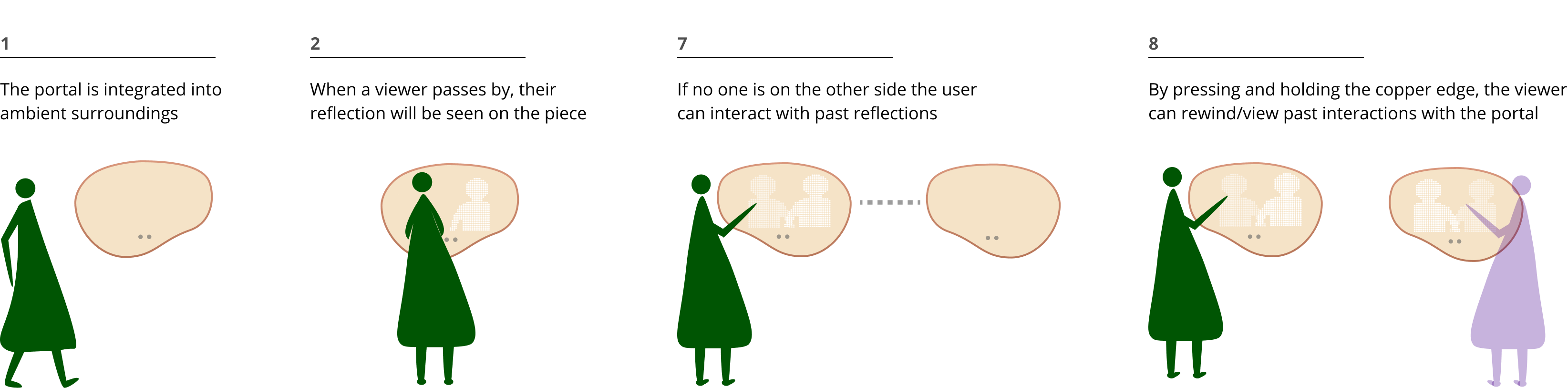

User Journey

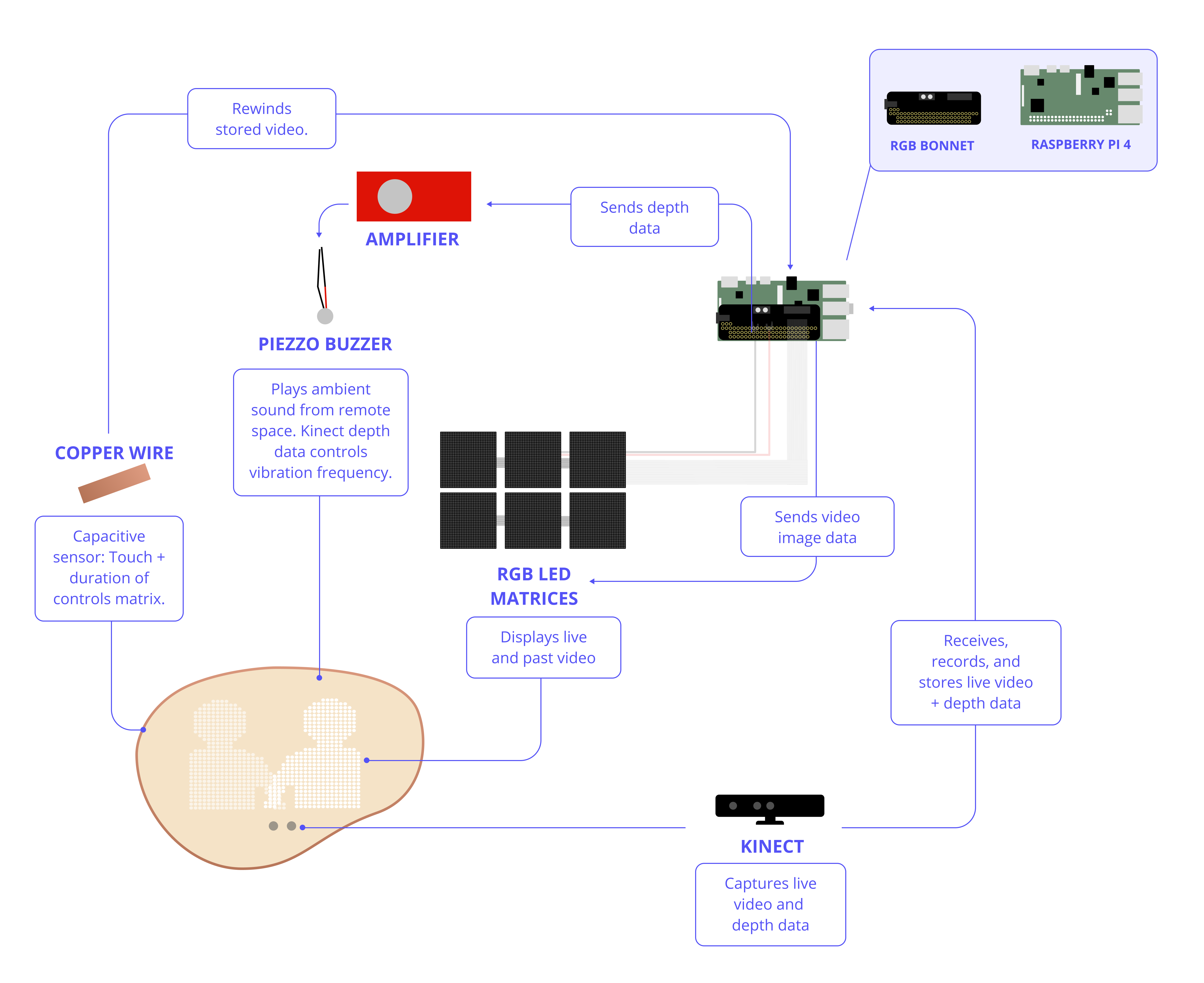

Technical System

To create working prototypes, we used a combination of hardware and open-source software (full details can be found in the Prototyping section).

Concept Development

After a few rounds of brainstorming, we decided to focus on making telepresence more sensorial. We were interested in the concept of temporality and how we might materialize, feel connected to, and interact with the lingering memories of spaces.

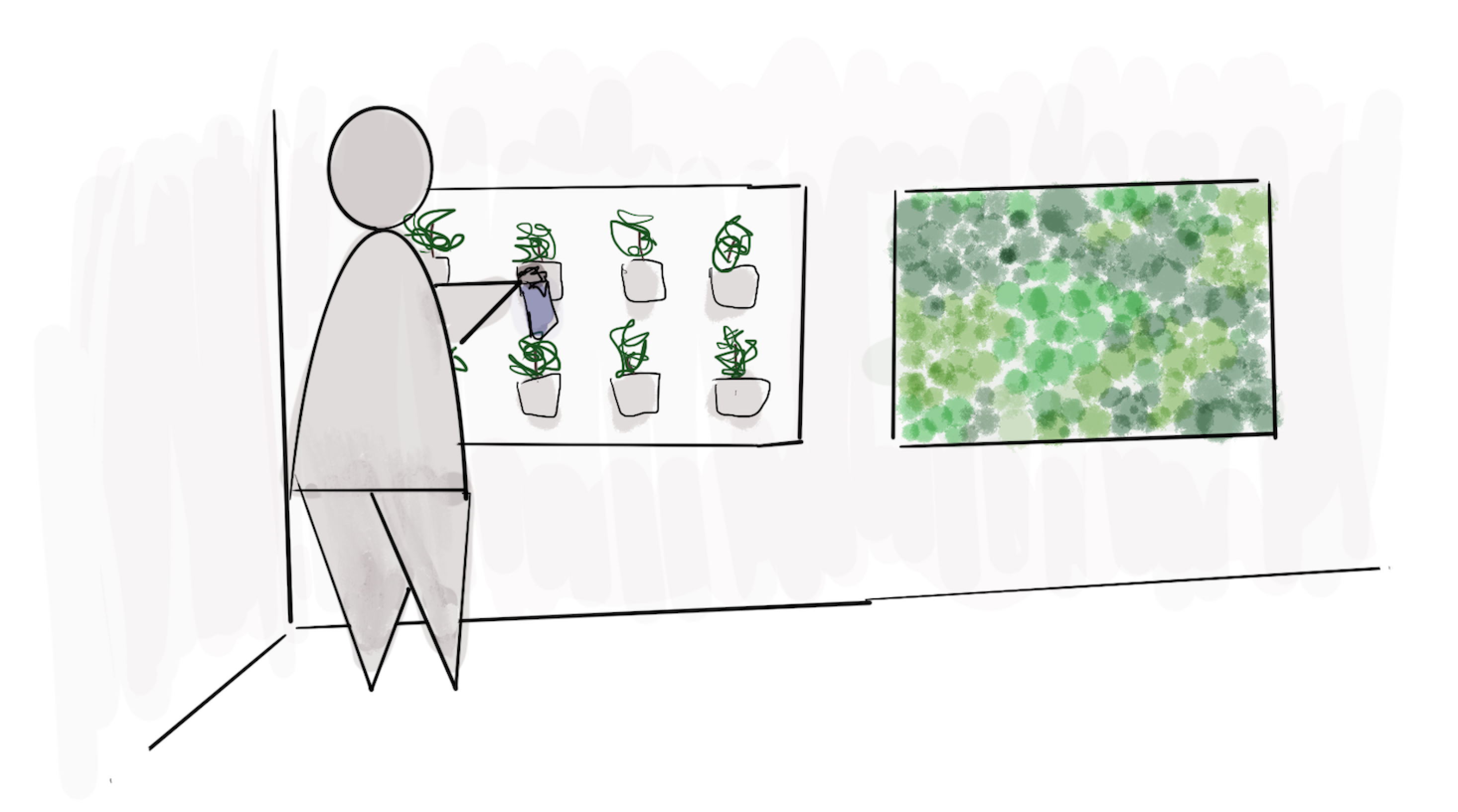

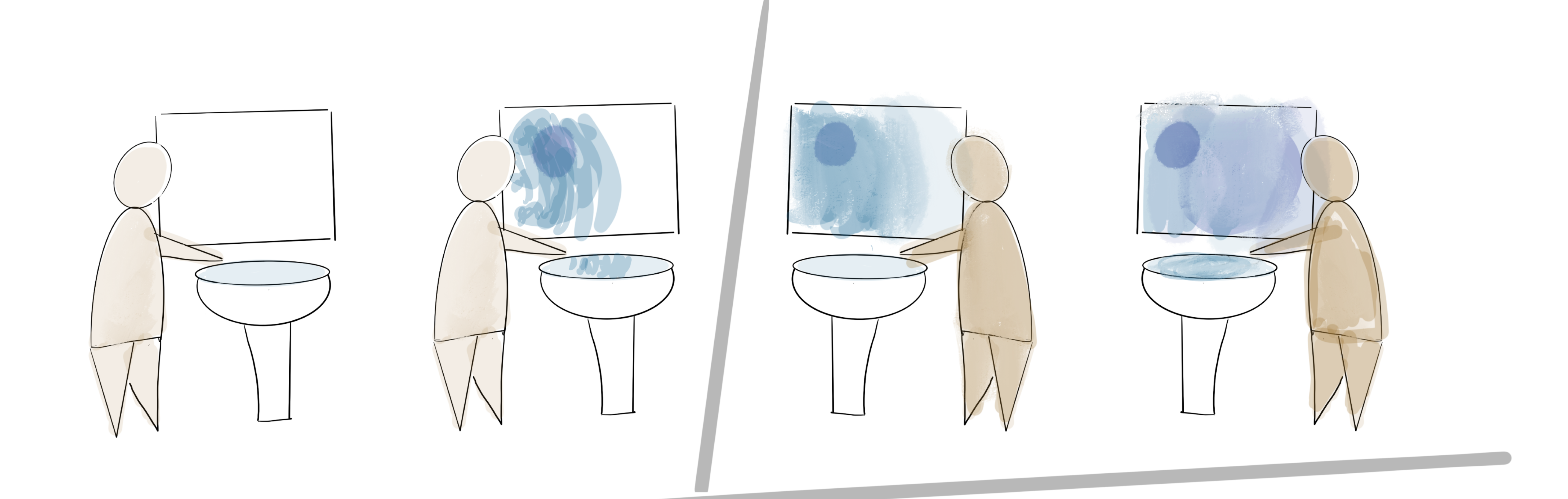

Our early explorations (a few below) developed these ideas in the form of wall installations physicalizing time and presence using tactile interactions, ambient sounds, and abstract projections.

Listening to sounds of sister spaces captured in plants through tactile and audio-based interactions

Two spaces: Sand, water, and flowing/layered projections to interact with a secondary space

Mirror displays distorted projections/shadows of a remote space into current space via an installation

Interacting with time: Rewind images/sounds with physical gestures or movements

Literature + Artifact Review

In parallel, we also looked at literature + artifacts to better understand temporality, phenomenology, cognitive psychology, and embodied sense-making. A few works we engaged with:

- What Can a Body Do?: How We Meet the Built World by Sara Hendren

- The Perception of the Environment: Essays on Livelihood, Dwelling, and Skill by Tim Ingold

- Being Outside the Dominion of Time by Benny Shanon

- Interactive Public Ambient Displays by Daniel Vogel and Ravin Balakrishnan

Concept Refinement

We continued developing an installation that surfaces traces of images and ambient sounds from a sister-space. The basis for our concept is the idea that objects and spaces “remember” and are encoded with past interactions, the sounds of those who were there before, the touch of those in another location, every interaction building on the last.

Initial circular form + interactions: rotate portal display to access another space + press into portal display to rewind time

In our installation, a viewer can see themselves first as a reflection. As they approach the portal, they can access memories of the past using gesture-based interactions; their reflection is then layered onto past reflections.

Refined Interactions + User Journey

After exploring potential technical routes, we decided to change the portal's form to a hanging wall installation.

All primary interactions between connected objects

Prototyping

In order to understand the dimensions of our concept, we developed a series of experiments in the form of working prototypes to investigate the interconnectedness of objects and the environment and its influence on one’s perception of time and space.

We prototyped in small, iterative stages. This helped us navigate the new technical knowledge (Python!) required to create working prototypes across our installation’s range of sensory-based interactions.

This semester, we were able to prototype four key interactions from our user journey.

prototyped: form, reflection, interaction with past, sound

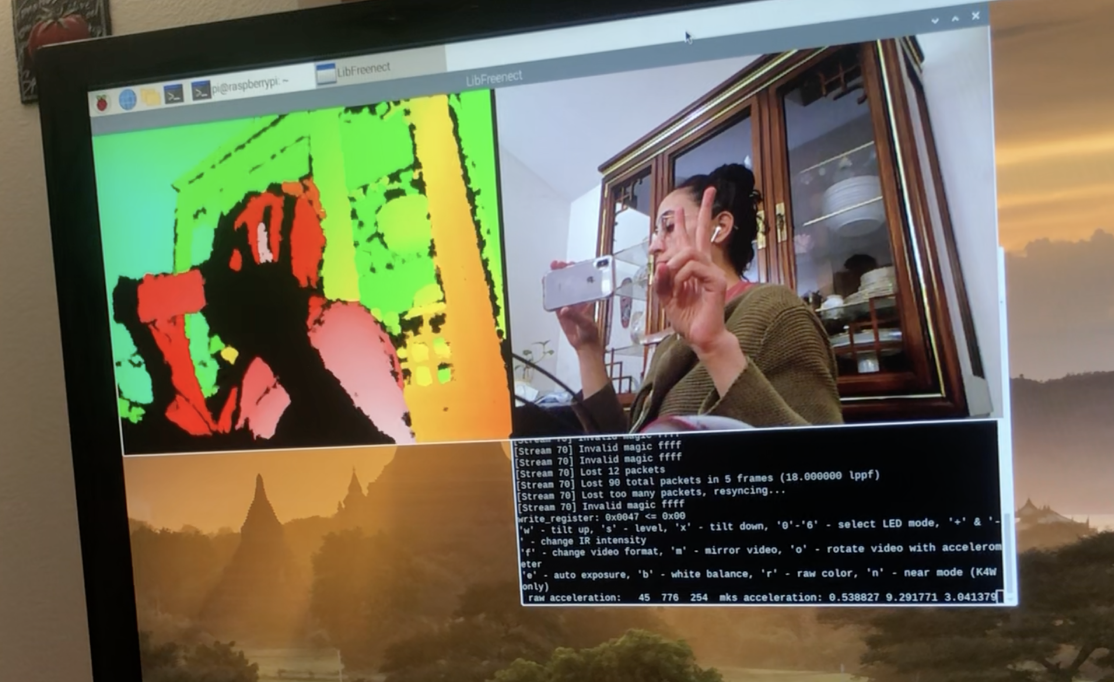

Prototyping Vision: Capturing and mapping body position to an output

We started by exploring existing projects that captured similar interactions or ideas. One common technology across projects was the XBOX Kinect, a sensor that captures RGB images and spatial body position data. We used the Kinect as a starting point, exploring different tools that might work alongside it.

Kinect point cloud: Initial test using Processing

After additional research, we landed on three pieces of hardware:

XBOX Kinect

To capture visual and spatial body position data

Raspberry Pi

To process captured Kinect data

RGB LED Matrices

To output visual data

The Kinect and RGB LED matrices work well with existing open-source libraries, and the Raspberry Pi has enough computational power to process video.

The hardware setup

01 Vision: Sending Kinect depth information to the Raspberry Pi

Our first step was to get Kinect depth data onto the Raspberry Pi using two open-source libraries, OpenCV (computer vision) and OpenKinect (Kinect driver). After some time, tutorials, and additional code refinements, we were able to run these programs successfully.

OpenKinect Library capturing Kinect depth data

02 Vision: Displaying live Kinect depth data onto the RGB Matrix

We spliced together and refined code from the rpi-rgb-led-matrix open-source library and additional online resources. Our vision was to translate Kinect depth data into a black-and-white image. The resulting visual was more suggestive than explicit, which our team felt captured temporality more realistically.

Live depth data displayed on the matrix
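As an illustration of the depth-to-image step, here is a minimal sketch in plain Python. It is not the project code: the function names, the `near`/`far` range, the choice to treat a reading of 0 as "no data", and the nearest-neighbour resize are all assumptions made for the example, and the depth values are taken to be in whatever unit the driver returns.

```python
def depth_to_gray(depth_rows, near=500, far=4000):
    """Map raw depth readings to 0-255 brightness (nearer = brighter).

    near/far are hypothetical clipping limits, not values from the project.
    """
    span = far - near
    image = []
    for row in depth_rows:
        out = []
        for d in row:
            if d <= 0:
                # Assumption: 0 means the sensor had no reading, so draw black.
                out.append(0)
                continue
            clamped = min(max(d, near), far)
            out.append(round(255 * (far - clamped) / span))
        image.append(out)
    return image


def downsample(image, out_w, out_h):
    """Nearest-neighbour resize of a grayscale image to the LED matrix size."""
    in_h, in_w = len(image), len(image[0])
    return [
        [image[y * in_h // out_h][x * in_w // out_w] for x in range(out_w)]
        for y in range(out_h)
    ]


if __name__ == "__main__":
    frame = [[500, 1500, 2500, 4000] for _ in range(4)]
    small = downsample(depth_to_gray(frame), out_w=2, out_h=2)
    print(small)
```

In a real pipeline the same mapping would more likely be done on whole arrays at once; the loops here only make the arithmetic explicit.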

03 Vision: Displaying and interacting with past Kinect depth video on the LED Matrix

Our next step was implementing interactions around spatial memory and temporality. This required more complex Python code. We needed to:

01

Store recorded depth video from the Kinect onto the Raspberry Pi.

02

Create a UI/control for a rewind interaction: This UI served as a first-step proxy for the installation’s capacitive sensor. We created a UI to display on the Raspberry Pi monitor with a trackbar that enabled the rewinding of stored video.

03

Superimpose past and present video on the RGB LED Matrix: This required alpha-blending the past video over the live video.

Superimposition of live and past video, trackbar UI, Python code
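The three steps above can be sketched roughly as follows. This is an illustrative outline under stated assumptions, not the code shown in the screenshot: `RewindBuffer`, `frame_at`, `blend`, the buffer length, and the 0-100 slider range are hypothetical names and values, and frames are represented as plain nested lists of grayscale values.

```python
from collections import deque


class RewindBuffer:
    """Keeps the most recent frames so a slider can scrub back through them."""

    def __init__(self, max_frames=300):
        # Assumption: a fixed-length buffer; the oldest frames drop off the end.
        self.frames = deque(maxlen=max_frames)

    def record(self, frame):
        self.frames.append(frame)

    def frame_at(self, slider, slider_max=100):
        """slider=0 returns the newest stored frame, slider_max the oldest."""
        if not self.frames:
            return None
        last = len(self.frames) - 1
        steps_back = round(slider / slider_max * last)
        return self.frames[last - steps_back]


def blend(live, past, alpha=0.5):
    """Weighted sum of two same-sized grayscale frames.

    alpha is the weight of the past frame: 0 shows only live, 1 only past.
    """
    return [
        [round((1 - alpha) * l + alpha * p) for l, p in zip(live_row, past_row)]
        for live_row, past_row in zip(live, past)
    ]


if __name__ == "__main__":
    buf = RewindBuffer(max_frames=3)
    for value in (10, 20, 30, 40):
        buf.record([[value, value]])
    past = buf.frame_at(slider=100)   # oldest frame still in the buffer
    print(blend([[200, 0]], past, alpha=0.5))
```

With OpenCV, the slider position would typically come from a trackbar created with `cv2.createTrackbar`, and the weighted sum could be computed with `cv2.addWeighted`; the pure-Python version here only spells out the logic.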

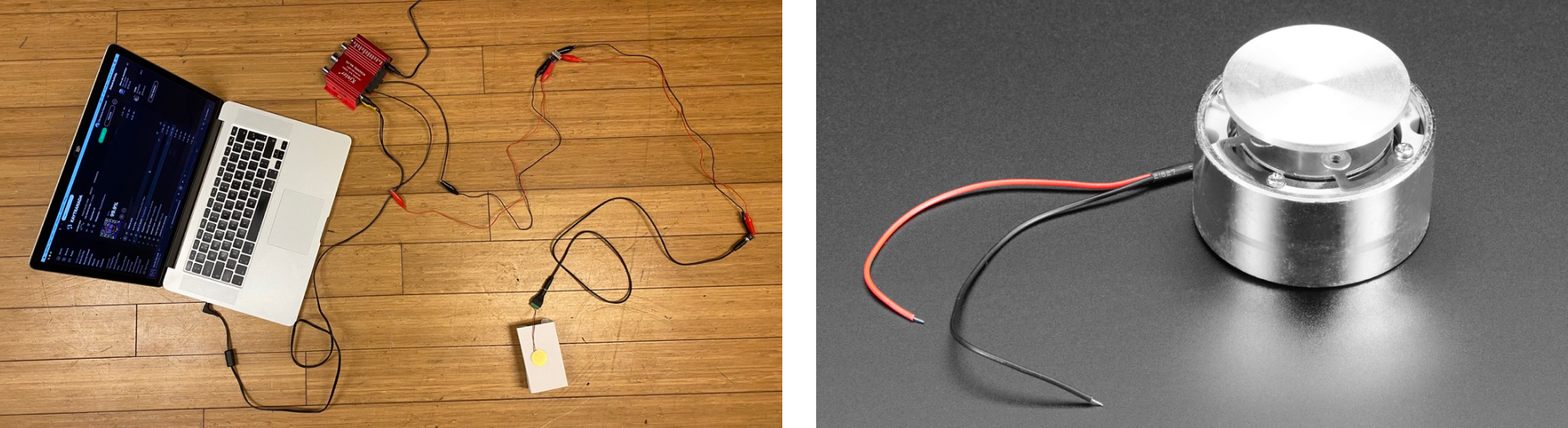

Prototyping Sound: Capturing + Playing Ambient Noise

We wanted to find a way to use the natural vibrations of the surrounding space to play back sound. We started by using a piezo buzzer with a separate transducer attached to an amplifier. However, this resulted in a vibration that was too soft. For our second iteration, we switched to a much larger surface transducer.

Left: V1 piezo buzzer, Right: V2 larger surface transducer

Second, we wanted a viewer's proximity to the installation to control the volume. We prototyped this first using an ultrasonic sensor and later using the Kinect’s depth data. This required a few equations with max depth and min depth to ensure that sound scales up or down as someone approaches or moves away from the installation.

V1 Test: Kinect depth data triggering sound (for video above: sound on)
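One simple form the max-depth/min-depth equation could take is a clamped linear map from distance to volume. The sketch below is an assumption-laden example rather than the project's formula: `volume_from_depth`, the default depth limits, and the choice that closer means louder are all hypothetical.

```python
def volume_from_depth(depth, min_depth=600, max_depth=3000):
    """Return a volume between 0.0 and 1.0 for a given viewer distance.

    Assumptions: min_depth/max_depth are placeholder limits in the sensor's
    own units, and the sound gets louder as the viewer gets closer.
    """
    # Clamp so readings outside the range do not push volume past 0 or 1.
    clamped = min(max(depth, min_depth), max_depth)
    return (max_depth - clamped) / (max_depth - min_depth)


if __name__ == "__main__":
    for distance in (400, 600, 1800, 3000, 5000):
        print(distance, volume_from_depth(distance))
```

Swapping the numerator to `clamped - min_depth` would invert the behavior, so the sound fades as someone approaches.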

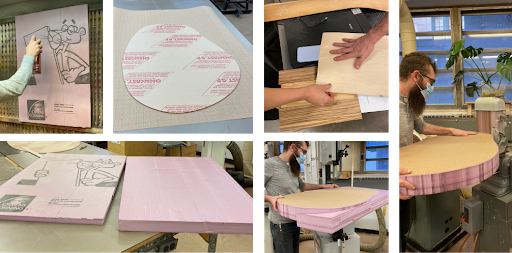

Prototyping Form

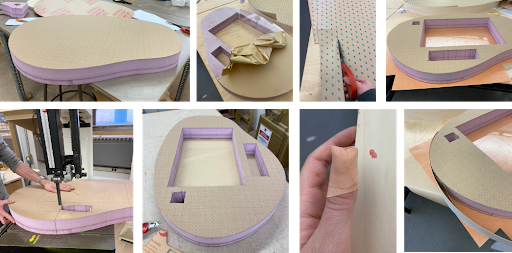

The final prototype was constructed from foam, acrylic, and wood veneer. We began by testing different veneer colors and thicknesses and finally settled on a light maple variant. Next, we cut our portal shape out of acrylic using a laser cutter. We also had to cut the same shape in the foam, for which we, thankfully, had help from Josiah Stadelmeier of CMU's 3D Lab. Once the foam was cut, we cut out spaces for all the electronic components. The final step was putting everything together: sticking the acrylic to the foam and adhering the veneer onto the acrylic top and foam sides.

Form process photo diary

Next Steps

With the main prototype working, we now want to focus on testing potential viewers' perceptions of interactions to guide refinements.

We also hope to explore the different environments this portal could reside in. For example, how might this piece interact within both public spaces and more intimate settings, such as the home? Based on this choice, the interactions would be thoroughly refined, potentially guiding us in altering the form.

Beyond the piece itself, we are interested in exploring how people with disabilities could interact with our concept, pushing our design to be more accessible. The sound component would allow people with low vision to interact with the portal; as next steps, we want to think about how we can make the color, interaction, and height more accessible.

Reflection

From concept to implementation, our team took on a steep learning curve to bring this concept to life. We expanded our understanding of design, sensory-based interactions, and technical implementation. We were able to scaffold our learning process by prototyping iteratively and in stages. This approach helped us evaluate our ideas, learn new technical knowledge, and determine best pathways forward.

We're proud of the explorations and prototypes we were able to achieve in 10 weeks with a fully remote team. A big thanks to our Professor Dina, our TA Matt, and my brother Sid.

© 2022 — Amrita Khoshoo